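1. Terminology

1.1 openEuler operating system

openEuler is an open-source operating system. Its kernel is currently based on Linux and supports Kunpeng and a range of other processors, with the aim of making full use of the underlying compute hardware. Built by open-source contributors worldwide, it is designed to be efficient, stable, and secure, and is suited to databases, big data, cloud computing, artificial intelligence, and similar workloads. openEuler is also a global open-source operating system community that, through community collaboration, builds an innovation platform and a unified, open operating system supporting multiple processor architectures, promoting a thriving hardware and software ecosystem.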

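1.2 Kubernetes (K8s) container orchestration engine

Kubernetes is an open-source container orchestration engine for automating the deployment, scaling, and management of containerized applications. The project is hosted by the Cloud Native Computing Foundation (CNCF).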

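1.3 RKE deployment tool

RKE is a CNCF-certified open-source Kubernetes distribution that runs entirely within Docker containers. By removing most host dependencies and providing a stable path for deployment, upgrades, and rollbacks, it addresses the installation complexity that is a common pain point with Kubernetes. With RKE, Kubernetes is independent of the underlying operating system and platform, which simplifies automating Kubernetes operations. As long as a supported Docker version is running, RKE can deploy and run Kubernetes. RKE can build a cluster with a single command in a few minutes, and its declarative configuration makes Kubernetes upgrades atomic and safe.

- RKE currently has two branches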

1.4 Rancher management platform

Rancher is one of the most widely used enterprise Kubernetes management platforms in the industry.

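2. Environment preparation

2.1 Cluster host requirements

Machines used for the Kubernetes cluster must meet the following requirements:

- One or more machines running openEuler

- Hardware: at least 2 GB of RAM, at least 2 CPUs, and at least 100 GB of disk

- Full network connectivity between all machines in the cluster

- Internet access for pulling images; if a server has no Internet access, download the images in advance and import them on the node

- Swap disabled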

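2.2 Versions

Rancher and RKE are strict about the versions of the components they run with, so settle on the version of each piece of software before deploying. See: https://www.suse.com/zh-cn/suse-rancher/support-matrix/all-supported-versions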

| Name | Version |
|---|---|
| openEuler | |
| Docker | v20.10.7 |
| RKE | v1.2.23 |
| Kubernetes | v1.20.15-rancher2-1 |
| Rancher | v2.5.16 |
| Helm | |

2.3 Cluster hostnames, IP addresses, and roles

| Hostname | IP address | Roles |
|---|---|---|
| master01 | 192.168.35.41 | rancher, controlplane, etcd, rke |
| worker01 | 192.168.35.42 | worker |
| worker02 | 192.168.35.43 | worker |

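3. Cluster configuration

3.1 Hostname configuration

Set the hostname on each host:

This must be done on every host, running the command that matches that host. The general form is: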

hostnamectl set-hostname xxx

[root@localhost ~]# hostnamectl set-hostname master01

[root@localhost ~]# hostnamectl set-hostname worker01

[root@localhost ~]# hostnamectl set-hostname worker02

Hostname and IP address resolution (edit /etc/hosts):

This must be done on every host.

[root@localhost ~]# vi /etc/hosts

[root@localhost ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

# Add the following host:

192.168.35.41 master01

192.168.35.42 worker01

192.168.35.43 worker02
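Because the same three entries have to be added on every host, the manual edit above can be sketched as a small helper that appends an entry only when the hostname is not already present. The function name add_host_entry is hypothetical, not part of any tool used in this guide, and the hostname is used as a regular expression, so names containing dots match loosely.

```shell
#!/usr/bin/env bash
# add_host_entry FILE IP NAME (hypothetical helper)
# Appends "IP NAME" to FILE unless NAME already appears as a hostname
# on a non-comment line, so the call is safe to repeat.
add_host_entry() {
    local file="$1" ip="$2" name="$3"
    if grep -Eq "^[^#]*[[:space:]]${name}([[:space:]]|\$)" "$file" 2>/dev/null; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Usage (as root, on every host):
#   add_host_entry /etc/hosts 192.168.35.41 master01
#   add_host_entry /etc/hosts 192.168.35.42 worker01
#   add_host_entry /etc/hosts 192.168.35.43 worker02
```

Running the three calls a second time leaves /etc/hosts unchanged, which makes the step convenient to include in a provisioning script.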

Configure ip_forward and bridge filtering

This must be done on every host. It passes bridged IPv4 traffic to the iptables chains.

[root@localhost ~]# vim /etc/sysctl.conf

[root@localhost ~]# cat /etc/sysctl.conf

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

Load the br_netfilter kernel module, which provides network filtering support for bridges:

[root@localhost ~]# modprobe br_netfilter

Reload and apply the kernel parameters in /etc/sysctl.conf. The new settings take effect immediately, without a reboot.

[root@localhost ~]# sysctl -p /etc/sysctl.conf

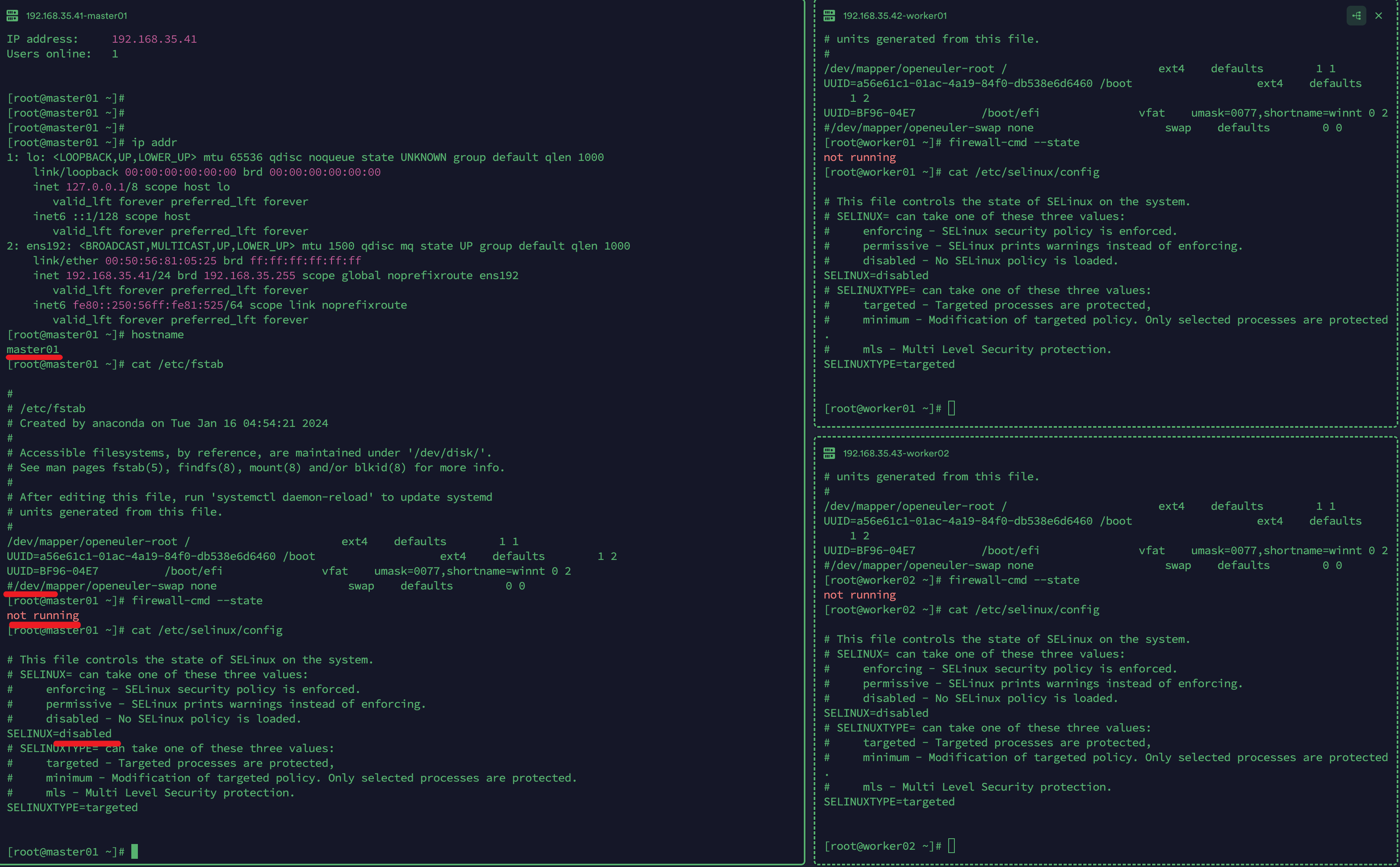
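Note that modprobe loads br_netfilter only for the current boot, and the hosts are rebooted in section 3.2.5. The following is a minimal sketch of one way to make both the module and the parameters persistent, assuming a systemd-based host that reads /etc/modules-load.d and /etc/sysctl.d. The function name write_k8s_kernel_config and the file name k8s.conf are assumptions chosen for illustration.

```shell
#!/usr/bin/env bash
# write_k8s_kernel_config ROOT (hypothetical helper)
# Writes a modules-load.d entry and a sysctl.d drop-in below ROOT.
# Pass an empty string to write under the real /etc.
write_k8s_kernel_config() {
    local root="${1:-}"
    mkdir -p "$root/etc/modules-load.d" "$root/etc/sysctl.d"
    # Load br_netfilter automatically at every boot
    printf 'br_netfilter\n' > "$root/etc/modules-load.d/k8s.conf"
    # Same three parameters as in /etc/sysctl.conf above
    cat > "$root/etc/sysctl.d/k8s.conf" <<'EOF'
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
}

# Usage (as root):
#   write_k8s_kernel_config "" && modprobe br_netfilter && sysctl --system
```

Keeping the settings in a dedicated drop-in rather than in /etc/sysctl.conf is a design choice: it makes them easy to identify and remove later.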

3.2 Host security settings

3.2.1 Disable the firewall

[root@localhost ~]# systemctl stop firewalld

[root@localhost ~]# systemctl disable firewalld

Removed /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

[root@localhost ~]# firewall-cmd --state

not running

[root@localhost ~]#

3.2.2 Disable SELinux

Disable permanently (takes effect only after the operating system is rebooted):

[root@localhost ~]# sed -ri 's/SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

Disable temporarily (switches SELinux to permissive mode until the next reboot):

[root@localhost ~]# setenforce 0

3.2.3 Disable swap on the hosts

Disable permanently (takes effect after the operating system is rebooted):

[root@localhost ~]# sed -ri 's/.*swap.*/#&/' /etc/fstab

or

[root@localhost ~]# vi /etc/fstab

Comment out the swap entry:

[root@localhost ~]# cat /etc/fstab

#

# /etc/fstab

# Created by anaconda on Tue Jan 16 04:54:21 2024

#

# Accessible filesystems, by reference, are maintained under '/dev/disk/'.

# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info.

#

# After editing this file, run 'systemctl daemon-reload' to update systemd

# units generated from this file.

#

/dev/mapper/openeuler-root / ext4 defaults 1 1

UUID=a56e61c1-01ac-4a19-84f0-db538e6d6460 /boot ext4 defaults 1 2

UUID=BF96-04E7 /boot/efi vfat umask=0077,shortname=winnt 0 2

#/dev/mapper/openeuler-swap none swap defaults 0 0

Disable temporarily (takes effect immediately, no reboot required):

[root@localhost ~]# swapoff -a
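The sed expression used above comments out every line that contains the word "swap", including lines that are already commented, so repeated runs stack extra "#" characters. A narrower variant can be sketched as follows, assuming GNU sed (as the -ri flags above already do). The function name comment_out_swap is hypothetical. It only touches active entries whose third field (the filesystem type) is swap.

```shell
#!/usr/bin/env bash
# comment_out_swap FSTAB (hypothetical helper)
# Prefixes "#" to each uncommented fstab entry whose filesystem type
# (third whitespace-separated field) is "swap". Already-commented lines
# do not match, so the function can be run more than once.
comment_out_swap() {
    local fstab="$1"
    sed -ri 's/^([^#[:space:]][^[:space:]]*[[:space:]]+[^[:space:]]+[[:space:]]+swap[[:space:]].*)$/#\1/' "$fstab"
}

# Usage (as root):
#   cp /etc/fstab /etc/fstab.bak
#   comment_out_swap /etc/fstab
```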

3.2.4 Configure time synchronization

This must be done on every host.

[root@master01 ~]# yum -y install ntpdate

Add a time synchronization job:

[root@master01 ~]# crontab -e

0 */1 * * * ntpdate time1.aliyun.com

[root@master01 ~]# date

3.2.5 Reboot all hosts and check the configuration

Configuration check:

[root@master01 ~]# firewall-cmd --state

not running

[root@master01 ~]# sestatus

SELinux status: disabled

[root@master01 ~]# free -m

total used free shared buff/cache available

Mem: 15404 3388 904 25 10726 12016

Swap: 0 0 0

[root@master01 ~]# crontab -l

0 */1 * * * ntpdate time1.aliyun.com

4. Docker deployment

4.1 Install Docker CE with DNF

This must be done on every host.

Update the packages managed by DNF:

[root@master01 ~]# dnf update -y

Install the required packages:

[root@master01 ~]# dnf install -y dnf-plugins-core

[root@master01 ~]# dnf install dnf-utils device-mapper-persistent-data lvm2 fuse-overlayfs wget

If installing Docker fails with "Requires: fuse-overlayfs >= 0.7" or "No match for argument: fuse-overlayfs", add the CentOS repository:

[root@master01 ~]# wget -O /etc/yum.repos.d/CentOS-Base.repo https://repo.huaweicloud.com/repository/conf/CentOS-7-reg.repo

[root@master01 ~]# sed -i 's+$releasever+7+' /etc/yum.repos.d/CentOS-Base.repo

[root@master01 ~]# dnf clean all

Add the Docker CE repository:

[root@master01 ~]# dnf config-manager --add-repo=https://repo.huaweicloud.com/docker-ce/linux/centos/docker-ce.repo

Note: before modifying the original docker-ce.repo file, you can back it up first with the following command, to be safe.

[root@master01 ~]# cp /etc/yum.repos.d/docker-ce.repo docker-ce.repo.bak

Replace the official address in docker-ce.repo with the Huawei open-source mirror to speed up downloads:

[root@master01 ~]# sed -i 's+download.docker.com+repo.huaweicloud.com/docker-ce+' /etc/yum.repos.d/docker-ce.repo

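Note: docker-ce.repo uses the $releasever variable in place of the system release number. This works on CentOS but not on openEuler, whose release number does not correspond to a CentOS directory in the Docker CE repository, so replace the variable directly with 8 or 7.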

[root@master01 ~]# sed -i 's+$releasever+7+' /etc/yum.repos.d/docker-ce.repo

[root@master01 ~]# cat /etc/yum.repos.d/docker-ce.repo
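The backup, mirror substitution, and $releasever replacement above can be combined into one repeatable step. This is only a sketch, assuming GNU sed. The function name prepare_docker_repo is hypothetical. Unlike the cp command above, it keeps the backup next to the repo file and does not overwrite an existing backup.

```shell
#!/usr/bin/env bash
# prepare_docker_repo REPO_FILE VERSION (hypothetical helper)
# 1. Backs up REPO_FILE to REPO_FILE.bak (only if no backup exists yet).
# 2. Points download.docker.com at the Huawei mirror.
# 3. Replaces every literal $releasever with VERSION (for example 7 or 8).
prepare_docker_repo() {
    local repo="$1" version="$2"
    [ -e "${repo}.bak" ] || cp -p "$repo" "${repo}.bak"
    sed -i \
        -e 's+download.docker.com+repo.huaweicloud.com/docker-ce+g' \
        -e 's+\$releasever+'"$version"'+g' \
        "$repo"
}

# Usage (as root):
#   prepare_docker_repo /etc/yum.repos.d/docker-ce.repo 7
```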

Refresh the metadata cache:

dnf makecache

Install docker-ce, docker-ce-cli, and containerd.io:

dnf install -y docker-ce-20.10.7 docker-ce-cli-20.10.7 containerd.io

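Start Docker (the daemon is not running yet, so the first docker version call below reports only the client):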

[root@master01 ~]# docker version

Client: Docker Engine - Community

Version: 20.10.7

API version: 1.41

Go version: go1.13.15

Git commit: f0df350

Built: Wed Jun 2 11:56:24 2021

OS/Arch: linux/amd64

Context: default

Experimental: true

Cannot connect to the Docker daemon at unix:///var/run/docker.sock. Is the docker daemon running?

[root@master01 ~]# systemctl enable docker

Created symlink /etc/systemd/system/multi-user.target.wants/docker.service → /usr/lib/systemd/system/docker.service.

[root@master01 ~]# systemctl start docker

Configure Docker registry mirrors and the insecure registry address:

[root@master01 ~]# vim /etc/docker/daemon.json

[root@master01 ~]# cat /etc/docker/daemon.json

{

"data-root": "/data",

"registry-mirrors": [

"https://docker.mirrors.ustc.edu.cn",

"https://registry.cn-hangzhou.aliyuncs.com",

"https://mirror.ccs.tencentyun.com",

"http://hub-mirror.c.163.com",

"https://dockerhub.azk8s.cn",

"https://registry.docker-cn.com",

"https://docker.m.daocloud.io",

"https://dockerhub.timeweb.cloud",

"https://noohub.ru",

"https://huecker.io"

], "insecure-registries": [

"harbor.jmu.edu.cn"

]

}

[root@master01 ~]# systemctl daemon-reload

[root@master01 ~]# systemctl restart docker

4.2 Install Docker Compose

List the available docker-compose-plugin versions with dnf:

[root@master01 ~]# dnf list available docker-compose-plugin --showduplicates

Available Packages

....

docker-compose-plugin.x86_64 2.21.0-1.el8 docker-ce-stable

Install the stable version 2.21.0:

[root@master01 ~]# dnf install -y docker-compose-plugin-2.21.0

[root@master01 ~]# docker compose version

Docker Compose version v2.21.0

5. Add the rancher user

The root account cannot be used here, so add a dedicated account for Docker-related operations. This must be done on every cluster host.

[root@master01 ~]# useradd rancher

[root@master01 ~]# usermod -aG docker rancher

[root@master01 ~]# echo xxx | passwd --stdin rancher

Changing password for user rancher.

passwd: all authentication tokens updated successfully.

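6. Generate an SSH key for cluster deployment

Create the key on the host where the rke binary is installed, that is, the control host used to deploy the cluster. Perform this on master01.

Generate an ed25519 SSH key: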

[root@master01 ~]# ssh-keygen -t ed25519

Generating public/private ed25519 key pair.

Enter file in which to save the key (/root/.ssh/id_ed25519):

Created directory '/root/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_ed25519

Your public key has been saved in /root/.ssh/id_ed25519.pub

The key fingerprint is:

SHA256:WvkHr68IuVbDYQDBq9C/GbUrb33exp2PmDiCgY0F2ZM root@master01

The key's randomart image is:

+--[ED25519 256]--+

| .o= . |

| + E |

| . o o |

|. . . o o. |

| . o * +S.. |

| . = +++. o |

| ++* ...o. . |

| + =oo.o=ooo. |

| =o .+=== ...|

+----[SHA256]-----+

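Note: by default ssh-keygen generates an RSA key, but openEuler does not accept that key type by default, which causes errors during RKE deployment. To check, look at the PubkeyAcceptedKeyTypes option in /etc/ssh/sshd_config (for example with cat /etc/ssh/sshd_config); the list does not include the legacy ssh-rsa type: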

PubkeyAcceptedKeyTypes ssh-ed25519,ssh-ed25519-cert-v01@openssh.com,rsa-sha2-256,rsa-sha2-512

Configure passwordless login (copy this host's public key to the remote hosts):

[root@master01 ~]# ssh-copy-id -i ~/.ssh/id_ed25519.pub rancher@master01

[root@master01 ~]# ssh-copy-id -i ~/.ssh/id_ed25519.pub rancher@worker01

[root@master01 ~]# ssh-copy-id -i ~/.ssh/id_ed25519.pub rancher@worker02

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_ed25519.pub"

The authenticity of host 'worker02 (192.168.35.43)' can't be established.

ED25519 key fingerprint is SHA256:wi93eN0gvkZ4pWPYtgSX9497+yZa/03uWlXX6prO7PY.

This host key is known by the following other names/addresses:

~/.ssh/known_hosts:1: master01

~/.ssh/known_hosts:3: worker01

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

Authorized users only. All activities may be monitored and reported.

rancher@worker02's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'rancher@worker02'"

and check to make sure that only the key(s) you wanted were added.

Test passwordless SSH login:

[root@master01 ~]# ssh rancher@master01

[root@master01 ~]# ssh rancher@worker01

[root@master01 ~]# ssh rancher@worker02

# Check that the user has permission to run docker

[rancher@worker02 ~]$ docker ps

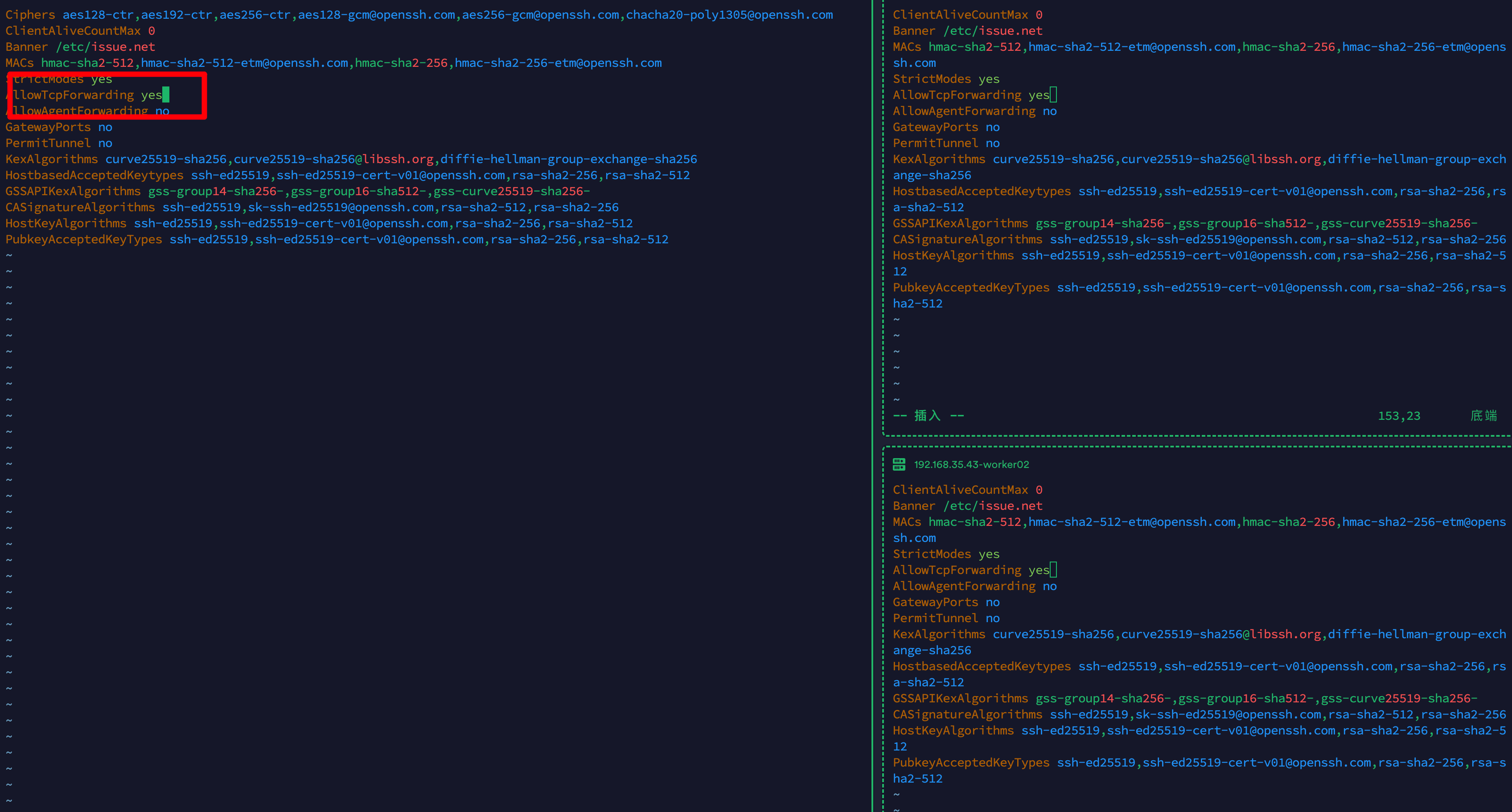
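Logging in to each node by hand works for three hosts. For more nodes, the same check (key-based login as rancher plus permission to run docker ps) can be sketched as a loop. The function name check_nodes and the SSH_CMD variable are hypothetical. BatchMode=yes is a standard OpenSSH option that makes ssh fail instead of prompting for a password, so a missing key shows up as FAIL rather than as a hanging prompt.

```shell
#!/usr/bin/env bash
# SSH_CMD can be overridden, for example for testing (hypothetical variable).
SSH_CMD="${SSH_CMD:-ssh -o BatchMode=yes}"

# check_nodes USER HOST... (hypothetical helper)
# Runs "docker ps" as USER on every HOST and prints OK or FAIL per host.
# Returns non-zero if at least one host failed.
check_nodes() {
    local user="$1" host failed=0
    shift
    for host in "$@"; do
        # $SSH_CMD is intentionally unquoted so its options are split into words
        if $SSH_CMD "${user}@${host}" docker ps >/dev/null 2>&1; then
            echo "OK   ${host}"
        else
            echo "FAIL ${host}"
            failed=1
        fi
    done
    return "$failed"
}

# Usage (on master01):
#   check_nodes rancher master01 worker01 worker02
```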

The system-wide SSH server configuration file, /etc/ssh/sshd_config, must contain the following line, which allows TCP forwarding:

[root@master01 ~]# vim /etc/ssh/sshd_config

AllowTcpForwarding yes

[root@master01 ~]# systemctl restart sshd

The configuration is shown in the figure:

7. RKE tool

Perform this on master01.

7.1 Download RKE

[root@master01 ~]# wget https://github.com/rancher/rke/releases/download/v1.2.23/rke_linux-amd64

[root@master01 ~]# cp rke_linux-amd64 /usr/local/bin/rke

[root@master01 ~]# chmod +x /usr/local/bin/rke

[root@master01 ~]# rke --version

rke version v1.2.23

7.2 Initialize the RKE configuration file

Create the configuration directory:

[root@master01 ~]# mkdir -p /app/rancher

[root@master01 ~]# cd /app/rancher

Generate the RKE configuration file:

[root@master01 rancher]# rke config --name cluster.yml

[+] Cluster Level SSH Private Key Path [~/.ssh/id_rsa]: ~/.ssh/id_ed25519

[+] Number of Hosts [1]: 3

[+] SSH Address of host (1) [none]: 192.168.35.41

[+] SSH Port of host (1) [22]:

[+] SSH Private Key Path of host (192.168.35.41) [none]: ~/.ssh/id_ed25519

[+] SSH User of host (192.168.35.41) [ubuntu]: rancher

[+] Is host (192.168.35.41) a Control Plane host (y/n)? [y]: y

[+] Is host (192.168.35.41) a Worker host (y/n)? [n]: n

[+] Is host (192.168.35.41) an etcd host (y/n)? [n]: y

[+] Override Hostname of host (192.168.35.41) [none]:

[+] Internal IP of host (192.168.35.41) [none]:

[+] Docker socket path on host (192.168.35.41) [/var/run/docker.sock]:

[+] SSH Address of host (2) [none]: 192.168.35.42

[+] SSH Port of host (2) [22]:

[+] SSH Private Key Path of host (192.168.35.42) [none]: ~/.ssh/id_ed25519

[+] SSH User of host (192.168.35.42) [ubuntu]: rancher

[+] Is host (192.168.35.42) a Control Plane host (y/n)? [y]: n

[+] Is host (192.168.35.42) a Worker host (y/n)? [n]: y

[+] Is host (192.168.35.42) an etcd host (y/n)? [n]: n

[+] Override Hostname of host (192.168.35.42) [none]:

[+] Internal IP of host (192.168.35.42) [none]:

[+] Docker socket path on host (192.168.35.42) [/var/run/docker.sock]:

[+] SSH Address of host (3) [none]: 192.168.35.43

[+] SSH Port of host (3) [22]:

[+] SSH Private Key Path of host (192.168.35.43) [none]: ~/.ssh/id_ed25519

[+] SSH User of host (192.168.35.43) [ubuntu]: rancher

[+] Is host (192.168.35.43) a Control Plane host (y/n)? [y]: n

[+] Is host (192.168.35.43) a Worker host (y/n)? [n]: y

[+] Is host (192.168.35.43) an etcd host (y/n)? [n]: n

[+] Override Hostname of host (192.168.35.43) [none]:

[+] Internal IP of host (192.168.35.43) [none]:

[+] Docker socket path on host (192.168.35.43) [/var/run/docker.sock]:

[+] Network Plugin Type (flannel, calico, weave, canal, aci) [canal]:

[+] Authentication Strategy [x509]:

[+] Authorization Mode (rbac, none) [rbac]:

[+] Kubernetes Docker image [rancher/hyperkube:v1.20.15-rancher2]:

[+] Cluster domain [cluster.local]:

[+] Service Cluster IP Range [10.43.0.0/16]:

[+] Enable PodSecurityPolicy [n]:

[+] Cluster Network CIDR [10.42.0.0/16]:

[+] Cluster DNS Service IP [10.43.0.10]:

[+] Add addon manifest URLs or YAML files [no]:

If you plan to deploy Kubeflow or Istio later, the following parameters must be set in cluster.yml (under services):

  kube-controller:
    image: ""
    extra_args:
      # Required if Kubeflow or Istio will be deployed later
      cluster-signing-cert-file: "/etc/kubernetes/ssl/kube-ca.pem"
      cluster-signing-key-file: "/etc/kubernetes/ssl/kube-ca-key.pem"

7.3 Deploy the Kubernetes cluster with RKE

[root@master01 ~]# cd /app/rancher

[root@master01 rancher]# rke up

8. Install the kubectl client

Perform this on master01.

Download the kubectl client:

[root@master01 rancher]# cd ~

[root@master01 ~]# wget https://storage.googleapis.com/kubernetes-release/release/v1.20.15/bin/linux/amd64/kubectl

Set up the cluster kubeconfig for kubectl and verify:

[root@master01 rancher]# cd ~

[root@master01 ~]# ls

anaconda-ks.cfg docker-ce.repo.bak kubectl rke_linux-amd64

[root@master01 ~]# chmod +x kubectl

[root@master01 ~]# cp kubectl /usr/local/bin/kubectl

[root@master01 ~]# kubectl version --client

Client Version: version.Info{Major:"1", Minor:"20", GitVersion:"v1.20.15", GitCommit:"8f1e5bf0b9729a899b8df86249b56e2c74aebc55", GitTreeState:"clean", BuildDate:"2022-01-19T17:27:39Z", GoVersion:"go1.15.15", Compiler:"gc", Platform:"linux/amd64"}

[root@master01 ~]# mkdir ./.kube

[root@master01 ~]# cp /app/rancher/kube_config_cluster.yml /root/.kube/config

[root@master01 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

192.168.35.41 Ready controlplane,etcd 2m7s v1.20.15

192.168.35.42 Ready worker 2m2s v1.20.15

192.168.35.43 Ready worker 2m2s v1.20.15

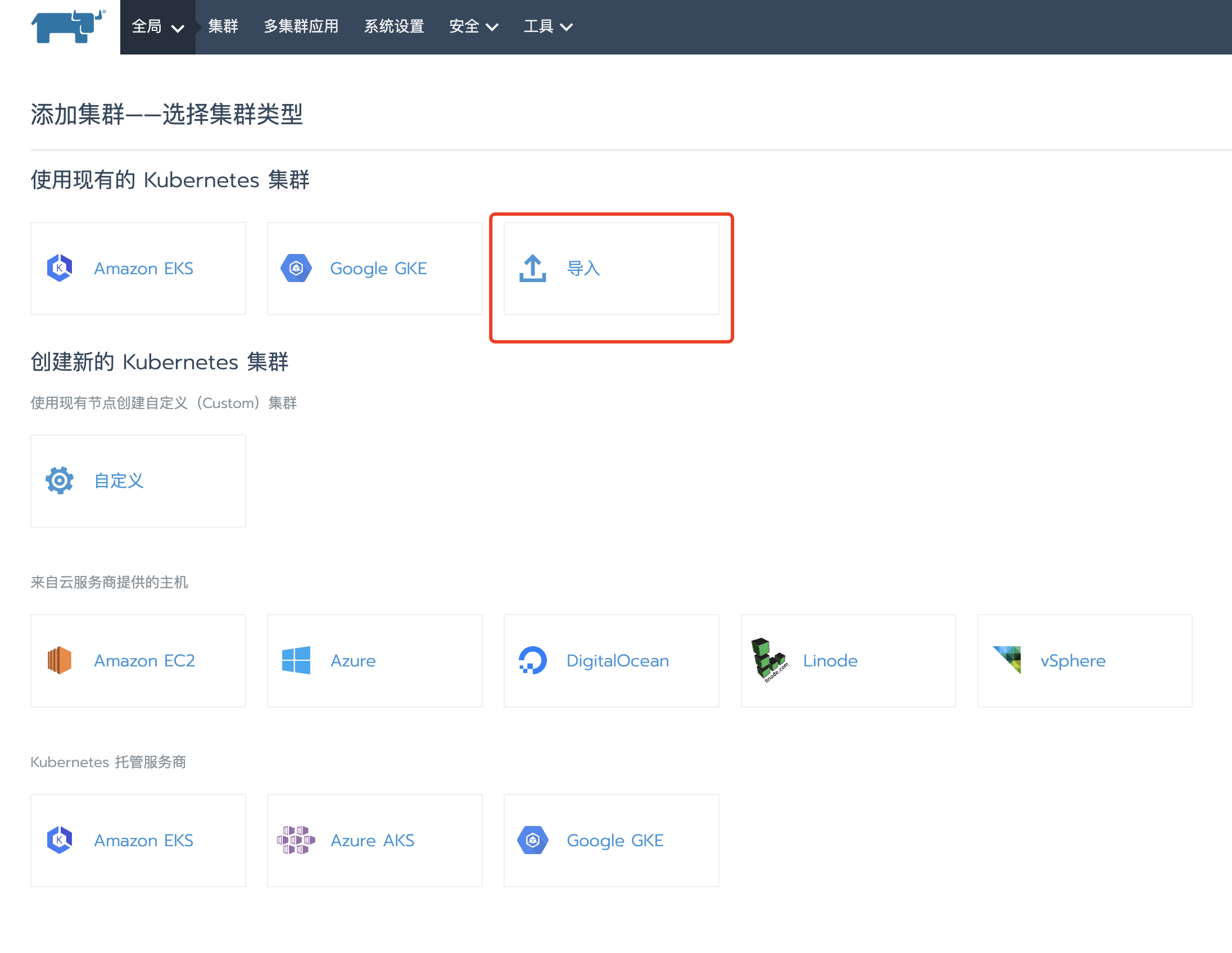

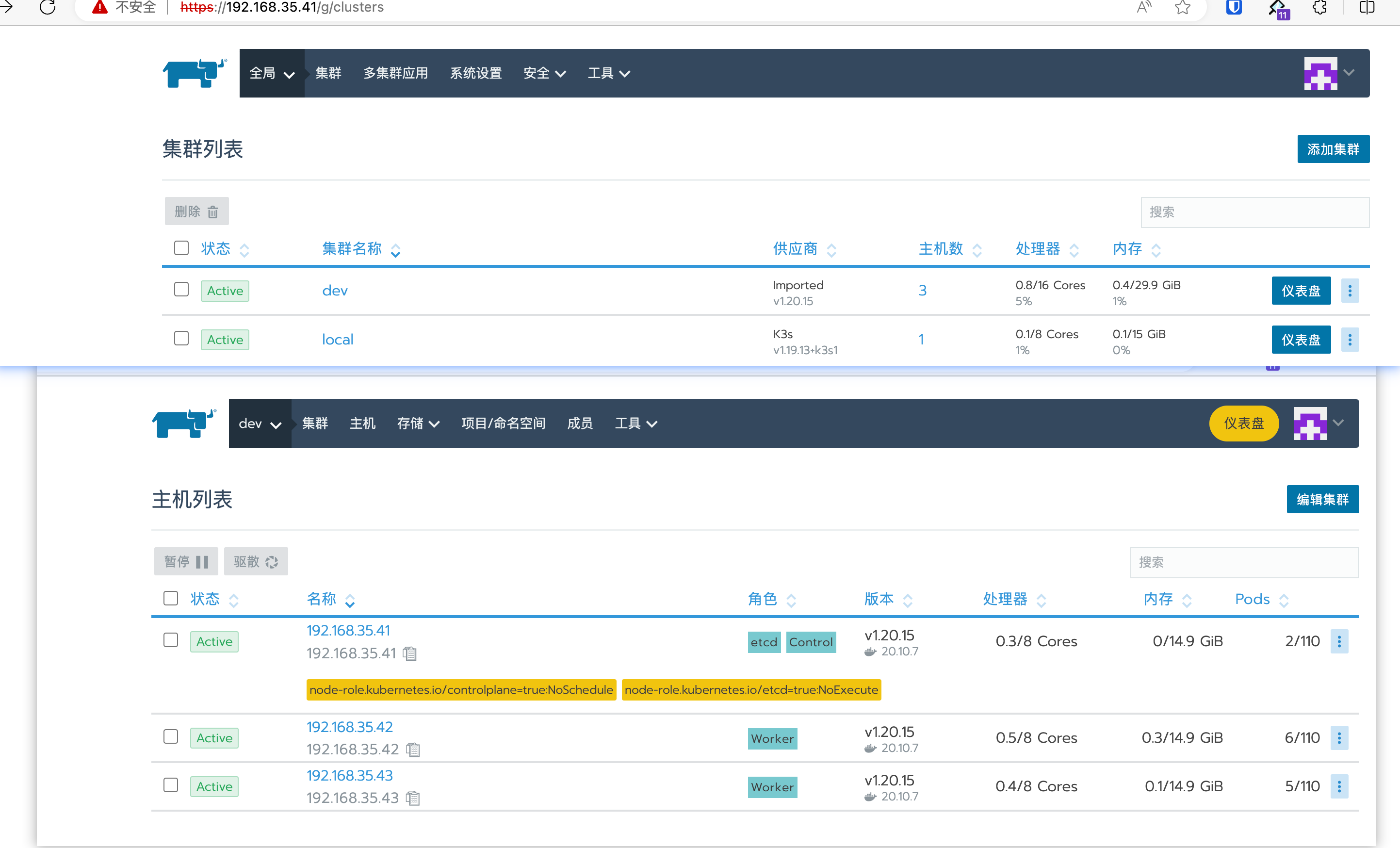

9. Deploy Rancher

Single-node deployment with Docker:

[root@master01 ~]# docker run -d --restart=unless-stopped --privileged --name rancher -p 80:80 -p 443:443 rancher/rancher:v2.5.16

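Choose to import a cluster:

Import cluster configuration:

Run the command. Rancher serves a self-signed certificate here, so the plain kubectl apply fails certificate verification; fetch the manifest with curl --insecure instead. In this run the first curl attempt returned no objects and the retry succeeded: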

[root@master01 ~]# kubectl apply -f https://192.168.35.41/v3/import/96d577rfzrc4m94d9vlvd2gp5k6n5m88dl6q8scff9zggfp95fg6lw_c-k7pr2.yaml

Unable to connect to the server: x509: certificate is valid for 127.0.0.1, 172.17.0.2, not 192.168.35.41

[root@master01 ~]# curl --insecure -sfL https://192.168.35.41/v3/import/96d577rfzrc4m94d9vlvd2gp5k6n5m88dl6q8scff9zggfp95fg6lw_c-k7pr2.yaml | kubectl apply -f -

error: no objects passed to apply

[root@master01 ~]# curl --insecure -sfL https://192.168.35.41/v3/import/96d577rfzrc4m94d9vlvd2gp5k6n5m88dl6q8scff9zggfp95fg6lw_c-k7pr2.yaml | kubectl apply -f -

Warning: resource clusterroles/proxy-clusterrole-kubeapiserver is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.

clusterrole.rbac.authorization.k8s.io/proxy-clusterrole-kubeapiserver configured

Warning: resource clusterrolebindings/proxy-role-binding-kubernetes-master is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.

clusterrolebinding.rbac.authorization.k8s.io/proxy-role-binding-kubernetes-master configured

namespace/cattle-system created

serviceaccount/cattle created

clusterrolebinding.rbac.authorization.k8s.io/cattle-admin-binding created

secret/cattle-credentials-a18a68a created

clusterrole.rbac.authorization.k8s.io/cattle-admin created

deployment.apps/cattle-cluster-agent created

After the import succeeds:

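10. Update node configuration

10.1 Node preparation (adding a node)

10.1.1 Install the operating system on the node

A node being added must have the same environment as the existing ones: install Docker, create the user, disable swap, and so on.

10.1.2 Hostname configuration

hostnamectl set-hostname xxx

10.1.3 IP address configuration

10.1.4 Hostname and IP address resolution (all hosts)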

# vim /etc/hosts

# cat /etc/hosts

......

192.168.10.10 master01

192.168.10.11 master02

192.168.10.12 worker01

192.168.10.13 worker02

192.168.10.14 etcd01

10.1.5 Configure ip_forward and bridge filtering

# vim /etc/sysctl.conf

# cat /etc/sysctl.conf

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

# modprobe br_netfilter

# sysctl -p /etc/sysctl.conf

10.1.6 Disable the firewall

# systemctl stop firewalld

# systemctl disable firewalld

10.1.7 Disable SELinux (requires a reboot)

sed -ri 's/SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

10.1.8 Disable swap (requires a reboot)

# sed -ri 's/.*swap.*/#&/' /etc/fstab

10.1.9 Time synchronization

# yum -y install ntpdate

# crontab -e

0 */1 * * * ntpdate time1.aliyun.com

10.1.10 Install Docker and Docker Compose

10.1.11 Add the rancher user

# useradd rancher

# usermod -aG docker rancher

# echo xxx | passwd --stdin rancher

10.1.12 Copy the SSH key

ssh-copy-id rancher@worker02

10.1.13 Verify that the SSH key works

From the host where the rke binary is installed, connect to the other cluster hosts and check that docker ps can be run.

# ssh rancher@worker02

remote-host# docker ps

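10.2 Add a node

Adding or removing worker nodes with --update-only may still trigger a redeployment or update of add-ons or other components.

RKE supports adding and removing nodes for the worker and controlplane roles. To add nodes, edit cluster.yml to include the additional nodes and specify their roles in the Kubernetes cluster; to remove nodes, delete their entries from the node list in cluster.yml.

Running rke up --update-only adds or removes worker nodes only; everything in cluster.yml other than the worker nodes is ignored.

10.2.1 Edit cluster.yml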

# vim cluster.yml

......

- address: 192.168.10.13
  port: "22"
  internal_address: ""
  role:
  - worker
  hostname_override: ""
  user: "rancher"
  docker_socket: /var/run/docker.sock
  ssh_key: ""
  ssh_key_path: ~/.ssh/id_ed25519
  ssh_cert: ""
  ssh_cert_path: ""
  labels: {}
  taints: []

......

10.2.2 Add the node with RKE

rke up --update-only

10.3 Remove a worker node

Edit cluster.yml and delete the entry for the node.

[root@master01 rancher]# rke up --update-only

[root@master01 rancher]# kubectl get nodes

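However, the pods on the removed worker node are not stopped. If the node is reused, the pods are removed automatically when a new Kubernetes cluster is created.

10.4 Add an etcd node

10.4.1 Edit cluster.yml

10.4.2 Run rke up

Because --update-only ignores everything except worker nodes, run rke up without that flag when adding an etcd node:

rke up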

10.4.3 Check the result

[root@master01 rancher]# kubectl get nodes

[root@etcd01 ~]# docker exec -it etcd /bin/sh

# etcdctl member list

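Common issues

Failed to set up SSH tunneling for host [x.x.x.x]: Can't retrieve Docker Info: error during connect:

- Passwordless login from the rke host to the nodes (rancher to rancher) is already configured

- From the rke node, ssh rancher@xxx.xxx.xxx.xxx logs in normally

- Passwordless login works and docker commands can be run. In that case, add the following to /etc/ssh/sshd_config: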

AllowTcpForwarding yes

Error response from daemon: Get "http://192.168.15.205/v2/": dial tcp 192.168.15.205:80: connect: connection refused

Add the registry address to insecure-registries in /etc/docker/daemon.json and restart Docker:

[root@localhost leader]# vim /etc/docker/daemon.json

[root@localhost leader]# cat /etc/docker/daemon.json

{

"registry-mirrors": ["https://bvyp19gi.mirror.aliyuncs.com"],

"insecure-registries": [

"192.168.15.205"

]

}

[root@localhost leader]# systemctl daemon-reload

[root@localhost leader]# systemctl restart docker

...